Streaming ChatGPT results to a view using Swift's AsyncSequence

An example of how to stream the text from ChatGPT to an iOS text view using URLSession's bytes(for:delegate:) method.

After comparing the results from OpenAI's davinci and curie models, I decided to stick with davinci and find a way to stream the results to my text view. A brief search turned up only JavaScript implementations of streaming; nothing came up for iOS.

So I made an example using the AsyncSequence functionality of URLSession's bytes(for:delegate:) method.

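The method I'm using to get results takes an optional delegate to stream results to. If a delegate is passed in, the method sets the "stream" field in the JSON request body to true and hands the request to the streaming fetch; otherwise it makes an ordinary request.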

    public func query(languageGeneratorRequest: LanguageGeneratorRequest, delegate: AssistiveChatHostStreamResponseDelegate? = nil) async throws -> NSDictionary? {
        // Create the URLSession lazily on first use.
        if searchSession == nil {
            searchSession = try await session()
        }

        guard let searchSession = searchSession else {
            throw LanguageGeneratorSessionError.ServiceNotFound
        }

        let components = URLComponents(string: LanguageGeneratorSession.serverUrl)

        guard let url = components?.url else {
            throw LanguageGeneratorSessionError.ServiceNotFound
        }

        var request = URLRequest(url: url)
        request.httpMethod = "POST"
        request.setValue("application/json", forHTTPHeaderField: "Content-Type")
        request.setValue("Bearer \(self.openaiApiKey)", forHTTPHeaderField: "Authorization")

        // Required fields first, then the optional ones only when they are set.
        var body: [String: Any] = [
            "model": languageGeneratorRequest.model,
            "prompt": languageGeneratorRequest.prompt,
            "max_tokens": languageGeneratorRequest.maxTokens,
            "temperature": languageGeneratorRequest.temperature
        ]

        if let stop = languageGeneratorRequest.stop {
            body["stop"] = stop
        }

        if let user = languageGeneratorRequest.user {
            body["user"] = user
        }

        // Ask the server for a streamed response only when someone is listening.
        if delegate != nil {
            body["stream"] = true
        }

        request.httpBody = try JSONSerialization.data(withJSONObject: body)

        if let delegate = delegate {
            return try await fetchBytes(urlRequest: request, apiKey: self.openaiApiKey, session: searchSession, delegate: delegate)
        } else {
            return try await fetch(urlRequest: request, apiKey: self.openaiApiKey) as? NSDictionary
        }
    }
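As an aside, the same request body could be written as an Encodable struct instead of a [String: Any] dictionary. This is only a sketch: CompletionRequestBody and encodeBody are hypothetical names that are not part of my project, and the field types (a single String for "stop", for example) are assumptions. Optional fields that are nil are left out of the JSON, which mirrors the "only add it if it's set" logic above.

```swift
import Foundation

// Hypothetical type for illustration; field types are assumptions.
struct CompletionRequestBody: Encodable {
    let model: String
    let prompt: String
    let maxTokens: Int
    let temperature: Double
    var stop: String? = nil
    var user: String? = nil
    var stream: Bool? = nil

    // Map the Swift property name to the snake_case key the API expects.
    enum CodingKeys: String, CodingKey {
        case model, prompt, temperature, stop, user, stream
        case maxTokens = "max_tokens"
    }
}

// Encodes the body to JSON; nil optionals are omitted by the synthesized encoder.
func encodeBody(_ body: CompletionRequestBody) throws -> Data {
    try JSONEncoder().encode(body)
}
```

With this shape, turning streaming on is a matter of passing stream: true when a delegate is present and leaving it nil otherwise.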

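When a delegate is supplied, query calls fetchBytes, which uses URLSession's bytes(for:) method. Instead of waiting for the whole body, it returns an asynchronous sequence of bytes that can be read line by line as the server sends them.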

    internal func fetchBytes(urlRequest: URLRequest, apiKey: String, session: URLSession, delegate: AssistiveChatHostStreamResponseDelegate) async throws -> NSDictionary? {
        print("Requesting URL: \(String(describing: urlRequest.url))")

        let (bytes, response) = try await session.bytes(for: urlRequest)

        guard let httpResponse = response as? HTTPURLResponse, httpResponse.statusCode == 200 else {
            throw LanguageGeneratorSessionError.InvalidServerResponse
        }

        let retVal = NSMutableDictionary()
        var fullString = ""

        for try await line in bytes.lines {
            // The server marks the end of the stream with this sentinel.
            if line == "data: [DONE]" {
                break
            }

            // Skip anything that is not a data line before stripping the prefix.
            guard line.hasPrefix("data:") else {
                continue
            }

            // Drop the five-character "data:" prefix; the rest is JSON.
            guard let d = line.dropFirst(5).data(using: .utf8) else {
                throw LanguageGeneratorSessionError.InvalidServerResponse
            }

            guard let json = try JSONSerialization.jsonObject(with: d) as? NSDictionary else {
                throw LanguageGeneratorSessionError.InvalidServerResponse
            }

            guard let choices = json["choices"] as? [NSDictionary] else {
                throw LanguageGeneratorSessionError.InvalidServerResponse
            }

            // Forward each text fragment to the delegate and keep a running copy.
            if let firstChoice = choices.first, let text = firstChoice["text"] as? String {
                fullString.append(text)
                delegate.didReceiveStreamingResult(with: text)
            }
        }

        fullString = fullString.trimmingCharacters(in: .whitespaces)
        retVal["text"] = fullString
        return retVal
    }
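The receiving side isn't shown in this post, so here is a minimal sketch of what a delegate could look like. StreamResponseDelegate is a hypothetical stand-in for my AssistiveChatHostStreamResponseDelegate (only the didReceiveStreamingResult(with:) method name comes from the code above), and TranscriptBuffer and onUpdate are made-up names for illustration.

```swift
import Foundation

// Hypothetical stand-in for AssistiveChatHostStreamResponseDelegate.
protocol StreamResponseDelegate: AnyObject {
    func didReceiveStreamingResult(with text: String)
}

// Collects streamed fragments and reports the running text after each one.
final class TranscriptBuffer: StreamResponseDelegate {
    private(set) var text = ""

    // Called with the full text so far; an app might assign this to a text view.
    var onUpdate: ((String) -> Void)?

    func didReceiveStreamingResult(with text: String) {
        self.text.append(text)
        onUpdate?(self.text)
    }
}
```

In an app, the onUpdate closure would be the place to set the text view's text. Since fetchBytes runs in an async context, that assignment would likely need to hop to the main thread first.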

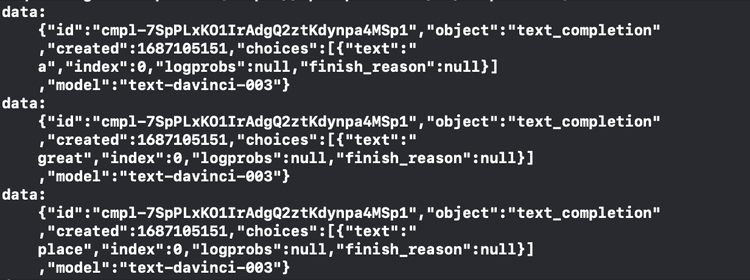

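Most of the code above pulls the text out of each partial response. Each line of the stream is the prefix "data: " followed by a JSON object, which is why the code drops the first five characters before parsing. A single line of the response looks like this: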

data: {"id":"cmpl-7SpPLxKO1IrAdgQ2ztKdynpa4MSp1","object":"text_completion","created":1687105151,"choices":[{"text":" sushi","index":0,"logprobs":null,"finish_reason":null}],"model":"text-davinci-003"}
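For comparison, here is a sketch of the same extraction done with Decodable instead of NSDictionary casts. CompletionChunk and completionText(fromEventLine:) are hypothetical names, and the struct only models the fields used here ("choices" and "text"); everything else in the payload is ignored by the decoder.

```swift
import Foundation

// Models only the part of the payload we read; other keys are ignored.
struct CompletionChunk: Decodable {
    struct Choice: Decodable {
        let text: String
    }
    let choices: [Choice]
}

// Returns the text fragment carried by one "data:" line, or nil for the
// "[DONE]" sentinel, non-data lines, and payloads that fail to decode.
func completionText(fromEventLine line: String) -> String? {
    let prefix = "data:"
    guard line.hasPrefix(prefix) else { return nil }

    // Strip the prefix and the whitespace around the payload.
    let payload = line.dropFirst(prefix.count).trimmingCharacters(in: .whitespaces)
    guard payload != "[DONE]", let data = payload.data(using: .utf8) else { return nil }

    return (try? JSONDecoder().decode(CompletionChunk.self, from: data))?.choices.first?.text
}
```

Fed the sample line above, this returns " sushi", with the leading space intact because only the outside of the payload is trimmed, not the JSON string inside it.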

The function also gathers the full response into a string and returns that in a dictionary of its own... although that goes unused in my code as of right now. I intend to cache these responses in iCloud at some point, so it makes sense to do both in the long run.

©2023, Secret Atomics