Measuring and Visualizing Motion of the Body Relative to Itself

The General Tau Theory proposes a metric for measuring the perception and execution of the body's motion relative to itself. Depth cameras capture changes in the relative position and angle of triangles formed by the body's joints, and these changes are rendered as visualizations of the values of Tau and Tau Dot.

In response to the Ars Electronica call for papers on this subject, I prepared the following abstract and supporting materials.

Unsupervised Training Using Visualizations of a Performer's Coupling of Tau Guides in VR or XR

We are looking for thought-provoking proposals that present innovative perspectives on working in expanded animation with the live body in motion.

Abstract

David Lee's work on perception and motion treats the organism as a unified whole in dynamic relation with its environment. His General Tau Theory lays the groundwork for building a system of expropriospecific-driven gestural intents that is unique to each individual. Rather than teaching everyone to type on the same keyboard, each person could define their own method of typing using their own personalized set of keys.

Lee observes that skilled movements are continually calibrated against an internal sense of change that provides a frame of reference for external change. Tau, the time to close a motion gap at its current rate of closure, describes the changing gap between the state an animal is in and the state it wants to be in. When the gap x(t) is a distance, as in a gesture's change of position, its first-order time derivative is the velocity v(t), so Tau may be written as:

τ(t) = x(t) / v(t)
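Tau Dot, used throughout the demos below, is the time derivative of tau; differentiating the formula above gives τ̇ = 1 − x·ẍ / ẋ². As a minimal sketch, assuming the gap is sampled once per tracking frame at a fixed interval `dt`, both can be estimated with finite differences. The function names are hypothetical. With this sign convention a closing gap has negative tau, a gap closing at constant speed has τ̇ = 1, and a gap closed by constant deceleration that stops exactly at contact has τ̇ = 0.5.

```python
import math
from typing import List


def derivative(values: List[float], dt: float) -> List[float]:
    """Finite-difference time derivative: central differences for interior
    samples, one-sided differences at the first and last samples."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two samples")
    out = [0.0] * n
    out[0] = (values[1] - values[0]) / dt
    out[-1] = (values[-1] - values[-2]) / dt
    for i in range(1, n - 1):
        out[i] = (values[i + 1] - values[i - 1]) / (2.0 * dt)
    return out


def tau_series(gap: List[float], dt: float, eps: float = 1e-9) -> List[float]:
    """tau(t) = x(t) / x_dot(t); NaN wherever the gap is (nearly) not changing."""
    rate = derivative(gap, dt)
    return [x / v if abs(v) > eps else math.nan for x, v in zip(gap, rate)]


def tau_dot_series(gap: List[float], dt: float) -> List[float]:
    """Time derivative of tau, estimated from the sampled tau series."""
    return derivative(tau_series(gap, dt), dt)
```

For example, `tau_dot_series([5, 4, 3, 2, 1], 1.0)` returns 1.0 at every sample, as expected for a gap closing at constant speed.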

The fourth principal tenet of the theory is, "A principal method of movement guidance is by tau-coupling the taus of different gaps, that is, keeping the taus in constant ratio." For two gaps x and y with a constant coupling ratio k, this may be written as:

τ(x) = k • τ(y)
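One way to check whether two measured gaps are tau-coupled is to fit a single constant k to their tau series and see how much of the data that constant explains. The sketch below assumes both series are sampled on the same frames and uses a least-squares fit through the origin; this fitting approach and the name `estimate_coupling` are illustrative choices, not a procedure taken from Lee's papers.

```python
import math
from typing import List, Tuple


def estimate_coupling(tau_x: List[float], tau_y: List[float]) -> Tuple[float, float]:
    """Fit tau_x ~= k * tau_y by least squares through the origin.

    Returns (k, r2). r2 is the uncentered coefficient of determination that
    matches a no-intercept model: values near 1 mean a single constant k
    explains the data well, i.e. the taus stay in roughly constant ratio.
    Non-finite samples (for example where a gap stopped changing) are skipped.
    """
    pairs = [(a, b) for a, b in zip(tau_x, tau_y)
             if math.isfinite(a) and math.isfinite(b)]
    if not pairs:
        raise ValueError("no finite tau pairs")
    sxy = sum(a * b for a, b in pairs)
    syy = sum(b * b for _, b in pairs)
    if syy == 0:
        raise ValueError("tau_y is identically zero")
    k = sxy / syy
    ss_res = sum((a - k * b) ** 2 for a, b in pairs)
    ss_tot = sum(a * a for a, _ in pairs)
    r2 = 1.0 - ss_res / ss_tot if ss_tot > 0 else 0.0
    return k, r2
```

A pair of gaps whose taus are exactly in a 2:1 ratio yields k = 2 and r2 = 1; unrelated taus yield an r2 near zero.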

Skeleton tracking affords us the opportunity to measure the body’s motion in real time. Defining 67 triangles from the skeleton's joints, and tracking how their relationships change over time, provides one possible measurement of a performer's tau-coupling. A performer should be able to teach a multi-class classification ML model to recognize patterns of coupled taus as unique but repeatable gestures by supervising the system's training with visualizations of these measurements.
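The abstract does not name a particular model. As a stand-in for the multi-class classifier, the sketch below uses a nearest-centroid classifier, a deliberately simple swapped-in technique. It assumes each training example is a flat feature vector built from the triangles' tau values over one window (for instance 67 position taus per frame); the class name and the gesture labels are hypothetical.

```python
import math
from collections import defaultdict
from typing import Dict, List, Sequence


class NearestCentroidGestures:
    """Toy multi-class classifier: each gesture label is represented by the
    mean of its example feature vectors, and a new vector receives the label
    of the closest mean (Euclidean distance)."""

    def __init__(self) -> None:
        self.centroids: Dict[str, List[float]] = {}

    def fit(self, examples: Sequence[Sequence[float]], labels: Sequence[str]) -> None:
        sums: Dict[str, List[float]] = {}
        counts: Dict[str, int] = defaultdict(int)
        for vec, label in zip(examples, labels):
            if label not in sums:
                sums[label] = [0.0] * len(vec)
            sums[label] = [s + v for s, v in zip(sums[label], vec)]
            counts[label] += 1
        self.centroids = {lab: [s / counts[lab] for s in vec]
                          for lab, vec in sums.items()}

    def predict(self, vec: Sequence[float]) -> str:
        if not self.centroids:
            raise RuntimeError("call fit() first")
        return min(self.centroids,
                   key=lambda lab: math.dist(vec, self.centroids[lab]))
```

The performer supervises by choosing which recorded windows carry which label; a more capable model could replace this class without changing that workflow.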

After training the system to recognize a new gesture, the performer may assign the gesture to an intention fulfilled by the surrounding systems that control their environment. For example, at home, one might connect one’s own gesture pattern recognizer to the API bridges in IFTTT to signal systems like Philips Hue. On stage, control of a performance's stage lights might be shared among the dancers through their interplay. One day, one might carry an agent that maps one’s own personal gesture system onto any available digital input.
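As a sketch of the home example, assuming IFTTT's Webhooks service (trigger URL `https://maker.ifttt.com/trigger/{event}/with/key/{key}`, with optional `value1` to `value3` JSON fields), a recognized gesture label can be mapped to an event name, and an IFTTT applet would then connect that event to Philips Hue. The mapping table, event names, and key are hypothetical placeholders.

```python
import json
import urllib.parse
import urllib.request
from typing import Dict, Optional

# Hypothetical mapping chosen by the performer: gesture label -> IFTTT event name.
GESTURE_TO_EVENT: Dict[str, str] = {
    "open_arms": "lights_on",
    "fold_arms": "lights_off",
}


def build_ifttt_request(gesture: str, key: str,
                        value1: Optional[str] = None) -> Optional[urllib.request.Request]:
    """Return a POST request for the Webhooks trigger URL, or None when the
    gesture has not been assigned an intention."""
    event = GESTURE_TO_EVENT.get(gesture)
    if event is None:
        return None
    url = "https://maker.ifttt.com/trigger/{}/with/key/{}".format(
        urllib.parse.quote(event), urllib.parse.quote(key))
    payload = {"value1": value1} if value1 is not None else {}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

Sending is left to the caller, e.g. `urllib.request.urlopen(req)`, so the recognizer can rate-limit or debounce triggers before they reach the lights.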

Visualizing the coupling of taus so that the performer can identify complex motion as a single gesture is the first step toward training a classification system. One option is to represent the values of coupled taus using the color, scale, and motion of meshes surrounding a skeleton in Unreal Engine. The visualizations can then be viewed in VR, or without a headset in an immersive environment such as Jake Barton’s Dreamcube.
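One possible mapping from a tau / tau-dot pair to the color and scale of a mesh is sketched below, outside Unreal Engine. The display range for tau-dot, the blue-to-red hue ramp, and the 1 / (1 + |tau|) scale (which enlarges meshes as a gap nears closure) are all assumptions chosen for illustration, not values from the theory.

```python
import colorsys
import math
from typing import Tuple

TAU_DOT_RANGE = (-1.0, 1.0)  # assumed display range, not a value from the theory


def tau_to_visual(tau: float, tau_dot: float) -> Tuple[Tuple[float, float, float], float]:
    """Map one tau / tau-dot pair to an RGB color (floats in 0..1) and a scale."""
    lo, hi = TAU_DOT_RANGE
    if not math.isfinite(tau_dot):
        tau_dot = lo
    # Clamp tau-dot into the display range and normalize it to 0..1.
    t = (min(max(tau_dot, lo), hi) - lo) / (hi - lo)
    # Hue runs from blue (low tau-dot) through green to red (high tau-dot).
    rgb = colorsys.hsv_to_rgb(0.66 * (1.0 - t), 1.0, 1.0)
    # Smaller |tau| (gap about to close) gives a larger mesh; NaN/inf hides it.
    scale = 1.0 / (1.0 + abs(tau)) if math.isfinite(tau) else 0.0
    return rgb, scale
```

For example, `tau_to_visual(0.0, 1.0)` gives pure red at full scale, while a tau-dot of 0 lands on green.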

Lee, David N. “Guiding Movement by Coupling Taus.” Ecological Psychology 10.3-4 (1998): 221-50. Perception Movement Action. Perception-Movement-Action Research Centre. Web. 19 May 2011.

Lee, David N. How Movement Is Guided. MS. University of Edinburgh. Perception Movement Action. Perception-Movement-Action Research Centre. Web. 19 May 2011.

Lee, David N. “Tau in Action in Development.” Action as an Organizer of Learning and Development. Ed. J. J. Rieser, J. J. Lockman, and C. A. Nelson. Hillsdale, N.J.: Erlbaum, 2005. 3-49.

Local Projects. DreamCube.

Past Work

The videos below show my explorations, beginning around 2011-2013, of how General Tau Theory could be applied to skeleton tracking and then visualized as an image pattern of relationships.

My work has evolved from visualizing skeletons in 2D using Processing and a Kinect over ten years ago to, most recently, using Unreal Engine and an Intel DepthSense camera to render a skeleton, visual effects, and live image textures of tau and tau-dot in 3D in real time.

The explorations toward the bottom show how gestures can be abstracted into particle systems and reimagined in the space around a performer. In addition to the skeleton, the performer can choose to see the raw pattern of Tau and Tau-Dot values being fed to a multi-class classification model, or the values of the taus can be represented using the color, scale, and motion of particles surrounding the skeleton.

Exploring the visualization that connects a performer to what the classification model has learned should then allow the performer to choose freely how to perform a gesture and assign it to express an intention. At first, particle systems are used to create the training; eventually, however, the training should be embedded in the systems watching the performer, so that other manifestations become possible given the unique intents derived from a captured performance.

Visualizing the centroid, circumcenter and Euler line of a triangle. Processing, circa 2013

This demo shows the geometry used to measure the relative change in position and angle of the Euler line formed by a triangle whose vertices are three joints of a tracked skeleton.
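The geometry in this demo can be sketched in 2D as follows: the centroid is the mean of the three joints, the circumcenter comes from the standard determinant formula, and the Euler line passes through both. Reporting the line as the centroid plus the angle of the circumcenter-to-centroid direction is an assumption made here for illustration; the function names are hypothetical.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def centroid(a: Point, b: Point, c: Point) -> Point:
    """Mean of the three joint positions."""
    return ((a[0] + b[0] + c[0]) / 3.0, (a[1] + b[1] + c[1]) / 3.0)


def circumcenter(a: Point, b: Point, c: Point) -> Point:
    """Center of the circle through all three joints (determinant formula)."""
    ax, ay = a
    bx, by = b
    cx, cy = c
    d = 2.0 * (ax * (by - cy) + bx * (cy - ay) + cx * (ay - by))
    if abs(d) < 1e-12:
        raise ValueError("degenerate triangle: joints are collinear")
    a2, b2, c2 = ax * ax + ay * ay, bx * bx + by * by, cx * cx + cy * cy
    ux = (a2 * (by - cy) + b2 * (cy - ay) + c2 * (ay - by)) / d
    uy = (a2 * (cx - bx) + b2 * (ax - cx) + c2 * (bx - ax)) / d
    return (ux, uy)


def euler_line(a: Point, b: Point, c: Point, eps: float = 1e-9) -> Tuple[Point, float]:
    """Euler line as (centroid, angle in radians of circumcenter -> centroid).

    Undefined for equilateral triangles, where the two centers coincide."""
    g = centroid(a, b, c)
    o = circumcenter(a, b, c)
    dx, dy = g[0] - o[0], g[1] - o[1]
    if math.hypot(dx, dy) < eps:
        raise ValueError("equilateral triangle: Euler line is undefined")
    return g, math.atan2(dy, dx)
```

For the right triangle (0, 0), (4, 0), (0, 3), the circumcenter is the hypotenuse midpoint (2, 1.5) and the centroid is (4/3, 1). Tracking the returned position and angle frame by frame gives the two quantities whose taus are measured.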

Debugging Euler lines of skeleton tracking in 2D. Processing, circa 2013

This demo shows the triangles and Euler lines used to measure taus from a tracked skeleton. The color of each line represents how strongly its Euler-line taus are activated as joints of the body move.
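Angle taus need an angular rate, and an Euler-line angle computed with `atan2` jumps by 2π when the line rotates past ±π. Naive frame-to-frame differencing would then report a huge spurious rate. A minimal sketch of unwrapping the per-frame angles before differencing is shown below; the function names are hypothetical, and how the resulting rate is paired with an angle gap to form tau is left open, as in the text.

```python
import math
from typing import List


def unwrap(angles: List[float]) -> List[float]:
    """Remove 2*pi jumps so consecutive angles differ by at most pi."""
    if not angles:
        return []
    out = [angles[0]]
    for a in angles[1:]:
        delta = a - out[-1]
        # Wrap the step into [-pi, pi) so the shortest rotation is used.
        delta = (delta + math.pi) % (2.0 * math.pi) - math.pi
        out.append(out[-1] + delta)
    return out


def angular_velocity(angles: List[float], dt: float) -> List[float]:
    """Rate of change of an unwrapped angle between consecutive frames."""
    u = unwrap(angles)
    return [(b - a) / dt for a, b in zip(u, u[1:])]
```

A line turning from 3.0 rad to -3.0 rad is treated as a small positive rotation of about 0.283 rad rather than a swing of -6 rad.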

Visualizing position and angle taus while doing jumping jacks. Processing, circa 2013

The grid here shows the position and angle taus between joints of the body that are activated as a tracked person performs jumping jacks.

Visualizing changes of position and direction with respect to the body. Processing, circa 2011-2013

The graphs shown in this demo track the magnitude of relative motion between joints of the body while tracking a skeleton.
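Assuming "magnitude of relative motion" means the speed of one joint measured in a frame attached to another joint, it can be computed from consecutive frames as sketched below. Translating the whole body then leaves the value unchanged, which matches the idea of measuring the body relative to itself. The function name and the 3D tuple layout are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def relative_speed(joint_a: Sequence[Vec3], joint_b: Sequence[Vec3], dt: float) -> List[float]:
    """Speed of joint_b relative to joint_a between consecutive frames.

    joint_a and joint_b hold one position per frame for each joint."""
    # Offset of b from a in each frame.
    rel = [tuple(pb_i - pa_i for pa_i, pb_i in zip(pa, pb))
           for pa, pb in zip(joint_a, joint_b)]
    # Distance the offset moves from one frame to the next, per second.
    return [math.dist(r0, r1) / dt for r0, r1 in zip(rel, rel[1:])]
```

If both joints move together, the relative speed stays at zero even though each joint is moving in camera space.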

Visualizing the tau of dancing. Processing, circa 2013

The grid here shows the position and angle taus of a dancing performer's tracked skeleton. Repeatable patterns should be recognizable as complex gestures by a classification system.

Skeleton Tracking in VR. Unreal Engine, 2023

This demo shows a VR POV of a tracked skeleton outlined with Niagara particle systems generated in Unreal Engine.

Visualizing a dancing skeleton using abstract particle systems. Unreal Engine, 2023

This demo represents the motion of a tracked skeleton abstractly, through the motion of particle systems. The goal is to replace the humanoid character with an expression of motion that is not tied to a representation of the performer's body.

Visualizing external particle system effects using body tracking. Unreal Engine, 2023

This demo shows how particle systems that are external to a tracked skeleton are affected by motion of the body.

VR POV Capture of Particle Systems affected by body tracking. Unreal Engine, 2023

This demo shows a VR POV of an abstracted skeleton, composed of particle systems, that moves external objects relative to the motion of the body.

Dreamcube pre-visualization of particle systems affected by body tracking. Unreal Engine, 2023

This demo shows a visualization of the interior of a Dreamcube that tracks a skeleton and moves particle systems in response to the motion of the skeleton's hand joints.

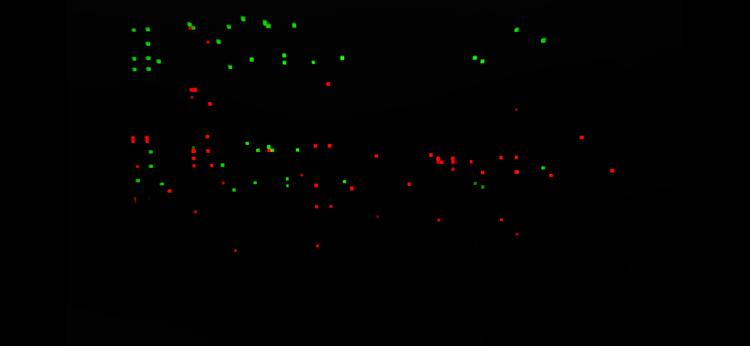

Capturing 3D Tau and Tau-Dot in Textures in Real-Time. Unreal Engine, 2023

This demo shows render targets generated in real time in Unreal Engine by calculating Tau and Tau-Dot for the position and angle of relative motion of 67 triangles formed by joints of a tracked skeleton. The resulting image could serve as input to an image classification system trained to parse complex motion into discrete gestures.
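The layout of such an image can be sketched outside Unreal Engine: one row per triangle and one column per quantity, with values clamped and remapped to 0..1 so they fit a texture. The four-channel row layout, the clamp limit of 5, and mapping non-finite taus to the midpoint are assumptions for illustration, not details confirmed by the demo.

```python
import math
from typing import List, Sequence

# Assumed layout of one row per triangle (not confirmed by the demo):
# [position tau, position tau-dot, angle tau, angle tau-dot]
CHANNELS = 4


def pack_tau_texture(rows: Sequence[Sequence[float]], limit: float = 5.0) -> List[List[float]]:
    """Clamp each value to [-limit, limit] and remap it to [0, 1] so the grid
    can be written into a texture. Non-finite values map to 0.5."""
    image: List[List[float]] = []
    for row in rows:
        if len(row) != CHANNELS:
            raise ValueError("expected {} channels per triangle".format(CHANNELS))
        pixels = []
        for v in row:
            if not math.isfinite(v):
                v = 0.0  # e.g. a gap that is not changing this frame
            v = min(max(v, -limit), limit)
            pixels.append((v + limit) / (2.0 * limit))
        image.append(pixels)
    return image
```

With 67 triangles this produces a 67 x 4 grid per frame; stacking frames side by side would give a fixed-size image that an image classifier could consume.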

Visualizing Tau Dot of Angle Changes Between Joints. Unreal Engine, 2023

This demo shows 67 triangles colored by the Tau Dot of the change in angle between joints.

Next Steps

- Continuing to assign visual effects to Tau and Tau-Dot to see if a recognizable pattern generator can be achieved

- Reducing the latency of generating the tau and tau-dot visuals

- Finding a meaningful scale for Tau and Tau-Dot for position and angle changes

- Feeding triangle positions, orientations, Euler lines, Tau, and Tau-Dot into a multi-class classification model to see whether meaningful categories can be found for gesture patterns

- Assigning intention via recognized/predicted gestures

- Writing a paper that answers the following questions:

In what new ways can the properties of human kinaesthetics be applied to animation? How can we counter the algorithmic biases built into the fabric of motion capture systems and the under-representation of different demographics in motion capture libraries? How might the technologies of surveillance, motion detection and capture be subverted and used for new artistic purposes? How can the space in which performance takes place be animated and what impact does this have on performer and audience experience? How can AI technology revolutionize/change the way we will animate human bodies? What does it mean to have a body in interactive animated environments (metaverse, games, VR)?

©2023, Secret Atomics