Secret Atomics

Secret Atomics consults with event organizers, product developers, and design leads to create interactive experiences for education and entertainment using iOS or Unreal Engine. We have over twenty years of experience helping launch new products with venture-backed startups and companies like Google, Samsung, and Apple.

Tips on Tasks from ChatGPT 4o

Improving a real-time frame rate by asking ChatGPT 4o to optimize your code.

Tula House Privacy Policy

Privacy Policy for Tula House

Know Maps Privacy Policy

Privacy Policy for Know Maps

Exploring Data Visualization on the Vision Pro

I used a previous sketch of an LED strip, along with some ShaderGraphMaterial modifiers, to explore data visualization on the Apple Vision Pro.

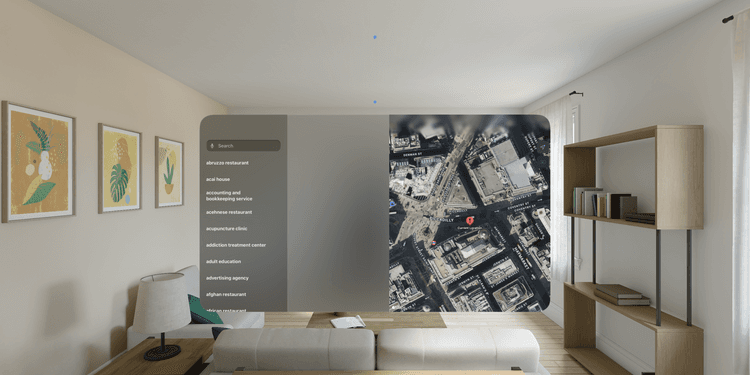

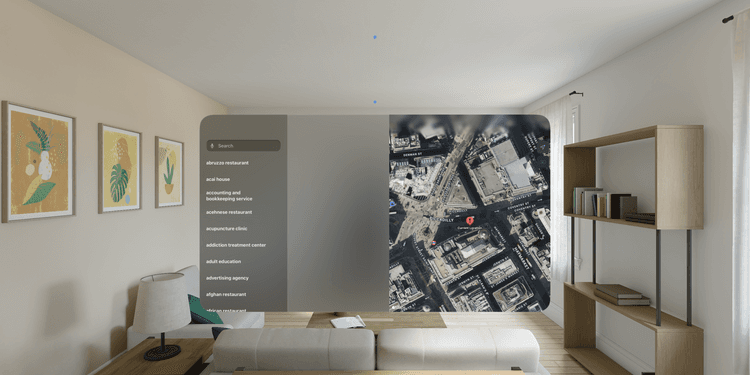

Know Maps: A visionOS Place Discovery App

Know Maps is a place discovery app that uses the Foursquare API to search for nearby places by category. Know Maps also generates an honest description of a business based on its reviews using the GPT-4 Turbo model.

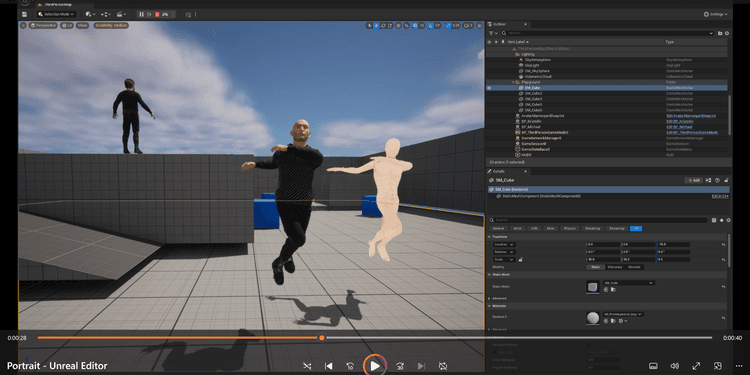

Using Nuitrack skeleton tracking to drive a MetaHuman skeleton

I used the new Nuitrack plugin for UE5 to drive a MetaHuman skeleton with my depth camera.

Creating a MetaHuman with an Intel RealSense depth camera

I used a depth camera to take a single image and turn it into a mask for an Unreal Engine MetaHuman.

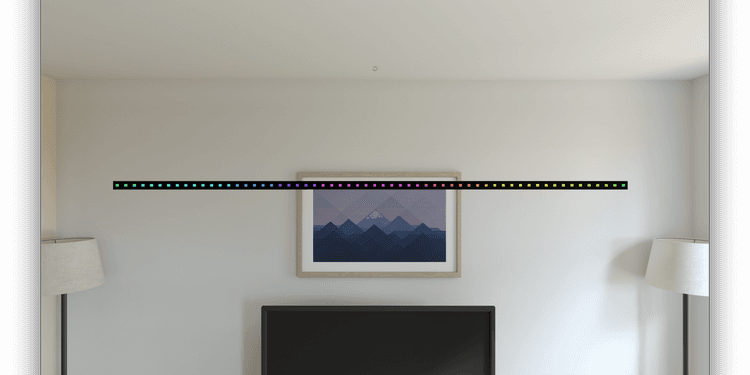

Modeling an LED Strip in visionOS

I modeled an LED strip to test a pipeline from Blender to Reality Composer Pro to Xcode for animating custom shader material attributes, such as the hue of the LED's emissive color.

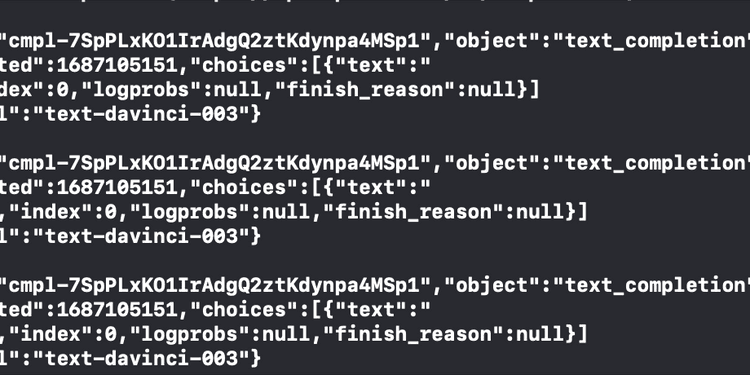

Streaming ChatGPT results to a view using Swift's AsyncSequence

An example of how to stream text from ChatGPT to an iOS text view using URLSession's bytes(for:delegate:) method.

The dilemma of using ChatGPT in the interview process

So far, I've used ChatGPT three times in the interview process, mostly for tasks I didn't have enough time for or didn't feel like doing on my own. Presenting yourself as an amalgamation of ChatGPT answers creates a dilemma when answering questions or, say, completing take-home technical tests during interviews.

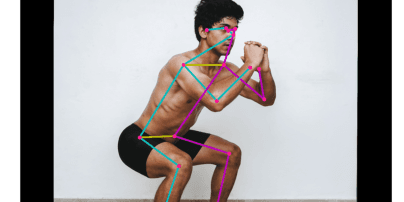

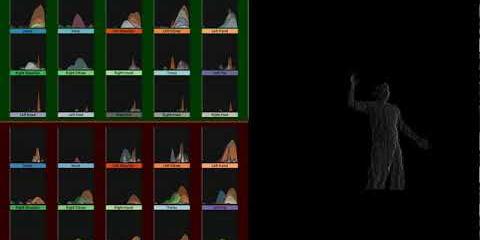

Measuring and Visualizing Motion of the Body Relative to Itself

General Tau Theory proposes a metric for the perception and execution of the body's motion in relation to itself. Using depth cameras to capture changes in the relative positions and angles of triangles formed by the body's joints produces visualizations of the values of tau and tau dot.
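To make the tau quantities concrete, here is a minimal C++ sketch, not the method used in the project. It assumes a single "gap" signal sampled at a fixed interval `dt` (for example, a hypothetical distance between two joints, or one angle of a joint triangle, measured once per depth frame) and uses finite differences. Tau is the gap divided by its rate of change, τ = x / ẋ, so it is negative while the gap is closing; tau dot is its time derivative. The function names are illustrative.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Sketch only: tau(t) = x(t) / x_dot(t), the time left to close the gap x
// at its current rate; tau_dot is d(tau)/dt.
// Assumption: samples are evenly spaced by dt seconds.
struct TauSample {
    double tau;
    double tauDot;
};

// Derivative of a uniformly sampled series: central differences inside,
// one-sided differences at the two ends.
std::vector<double> derivative(const std::vector<double>& x, double dt) {
    assert(x.size() >= 2 && dt > 0.0);
    const std::size_t n = x.size();
    std::vector<double> d(n);
    d[0] = (x[1] - x[0]) / dt;
    d[n - 1] = (x[n - 1] - x[n - 2]) / dt;
    for (std::size_t i = 1; i + 1 < n; ++i) {
        d[i] = (x[i + 1] - x[i - 1]) / (2.0 * dt);
    }
    return d;
}

std::vector<TauSample> computeTau(const std::vector<double>& gap, double dt) {
    const std::vector<double> gapRate = derivative(gap, dt);
    std::vector<double> tau(gap.size());
    for (std::size_t i = 0; i < gap.size(); ++i) {
        // Tau is undefined when the gap is not changing; mark it with NaN.
        tau[i] = gapRate[i] != 0.0 ? gap[i] / gapRate[i] : std::nan("");
    }
    const std::vector<double> tauRate = derivative(tau, dt);
    std::vector<TauSample> out(gap.size());
    for (std::size_t i = 0; i < gap.size(); ++i) {
        out[i] = {tau[i], tauRate[i]};
    }
    return out;
}
```

A useful sanity check: for a gap closing at constant speed, ẍ = 0, so τ̇ = 1 − xẍ/ẋ² = 1 at every sample. Depth-camera joint data is noisy, so some smoothing before differentiating would likely be needed in practice.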

Tips on implementing TensorFlow Lite to use MoveNet models in C++

I built TensorFlow and TensorFlow Lite from source to write my own C++ implementation that uses MoveNet to track up to six skeletons. This documentation includes some tips on implementing the model and interpreting its output.
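As an illustration of interpreting the model's output, the sketch below decodes a multi-person MoveNet result from a flat float buffer, such as the pointer returned by a TensorFlow Lite interpreter's `typed_output_tensor<float>(0)`. The layout is an assumption based on the MoveNet MultiPose documentation on TensorFlow Hub: a `[1, 6, 56]` tensor holding 17 keypoints as (y, x, score), followed by a bounding box and a detection score. Check it against your model before relying on it. The `decodeMultiPose` name and the `minScore` filter are hypothetical.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

// Assumed MoveNet MultiPose output layout: [1, 6, 56].
// Per person: 17 keypoints x (y, x, score), then (ymin, xmin, ymax, xmax, score).
// Coordinates are normalized to [0, 1] relative to the model input.
constexpr std::size_t kMaxPeople = 6;
constexpr std::size_t kKeypoints = 17;
constexpr std::size_t kValuesPerPerson = kKeypoints * 3 + 5;  // 56

struct Keypoint {
    float y, x, score;
};

struct Person {
    std::array<Keypoint, kKeypoints> keypoints;
    float ymin, xmin, ymax, xmax;  // bounding box
    float score;                   // overall detection confidence
};

// Decodes the flat output buffer and keeps detections scoring >= minScore.
std::vector<Person> decodeMultiPose(const float* output, float minScore) {
    assert(output != nullptr);
    std::vector<Person> people;
    for (std::size_t p = 0; p < kMaxPeople; ++p) {
        const float* row = output + p * kValuesPerPerson;
        Person person{};
        for (std::size_t k = 0; k < kKeypoints; ++k) {
            person.keypoints[k] = {row[3 * k], row[3 * k + 1], row[3 * k + 2]};
        }
        const float* box = row + kKeypoints * 3;
        person.ymin = box[0];
        person.xmin = box[1];
        person.ymax = box[2];
        person.xmax = box[3];
        person.score = box[4];
        if (person.score >= minScore) {
            people.push_back(person);
        }
    }
    return people;
}
```

Because the coordinates are normalized, multiply y by the input frame's height and x by its width (after undoing any letterboxing applied to the input) to get pixel positions for drawing skeletons.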

Working across platforms and teams on Google Maps

My time at Google spanned several areas of development, combining user journeys and projects from different teams into examples to pitch to executives. During my time there, I developed solutions for iOS, watchOS, and Unreal Engine.

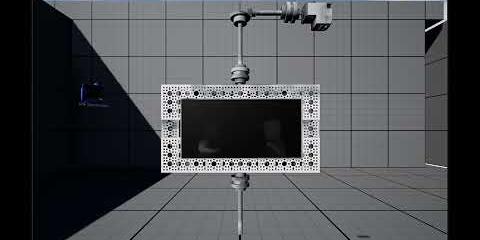

Iterating the Design of a Volumetric Display: Retrospective and v3 Plan

The Secret Atomics volumetric display has gone through three iterations, evolving from an inanimate object to a mechanized display driven by Unreal Engine.

About the Author

Michael is a freelance developer at Secret Atomics. He has nine years of professional experience developing iOS applications, data explorers, interactive installations, and games, and over twenty years of experience helping launch new products with venture-backed startups and companies like Google, Samsung, and Apple.

Comparing VR Rendering Models

For the past several months I've been building environments for the Oculus Quest. While deploying them to the device, I learned a few things about what makes VR lighting look good. For example, SM5 (Shader Model 5) gives much better results than Vulkan, so tethering over USB is sometimes advisable. Running on a PC will always beat mobile, but the gap between SM5 on a PC and Vulkan on the device is significant.

New Year's Eve Party

On New Year's Eve 2022, some friends from the Lizard Lounge threw a party at Brooklyn Burj in Crown Heights, Brooklyn. Secret Atomics helped the organizers produce lights and projections for three event spaces.

Rendering from Unreal Engine 5 to LED Panels over a wireless connection

I connected Unreal Engine to a Raspberry Pi using C++ support classes that implement a custom buffer. The buffer carries data from a scene capture component's texture memory, through an MQTT broker, to a wireless subscriber that drives the LED panels with a custom C++ driver. At 128 × 64 pixels with 8-bit color depth, the connection over a local router exceeds 90 Hz.
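The project's buffer format isn't documented here, so the following C++ sketch is hypothetical. It assumes the scene capture pixels are read back as 8-bit BGRA and that the panel driver wants tightly packed 8-bit RGB (reading "8-bit color depth" as 8 bits per channel). It also shows the payload arithmetic behind the frame rate: 128 × 64 × 3 = 24,576 bytes per frame, which at 90 Hz is about 17.7 Mbit/s before MQTT, TCP, and Wi-Fi overhead. Publishing to the broker is left out so as not to presume a particular MQTT client library.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical frame packer for a UE -> MQTT -> Raspberry Pi pipeline.
// Assumptions: input is 8-bit BGRA, output is packed 8-bit RGB, row by row.
constexpr int kPanelWidth = 128;
constexpr int kPanelHeight = 64;

std::vector<std::uint8_t> packBgraToRgb(const std::vector<std::uint8_t>& bgra,
                                        int width, int height) {
    assert(bgra.size() == static_cast<std::size_t>(width) * height * 4);
    std::vector<std::uint8_t> rgb(static_cast<std::size_t>(width) * height * 3);
    for (std::size_t i = 0, j = 0; i < bgra.size(); i += 4, j += 3) {
        rgb[j] = bgra[i + 2];      // R
        rgb[j + 1] = bgra[i + 1];  // G
        rgb[j + 2] = bgra[i];      // B (alpha is dropped)
    }
    return rgb;
}

// Raw payload throughput in bits per second, ignoring protocol overhead.
double payloadBitsPerSecond(std::size_t bytesPerFrame, double framesPerSecond) {
    return static_cast<double>(bytesPerFrame) * 8.0 * framesPerSecond;
}
```

Dropping the alpha channel before sending trims each frame by a quarter, which matters more over Wi-Fi than the cost of the repacking loop.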

Creating a YouTube VR video in Unreal

I have been setting up an environment in Unreal that I can render for YouTube VR. To get something representative of a high-fidelity environment, I first created a landscape, painted it with blended layers, and added assets and textures downloaded from Quixel.

Rebuilding a VR Landscape

I took 17 steps to set up a landscape environment for VR.

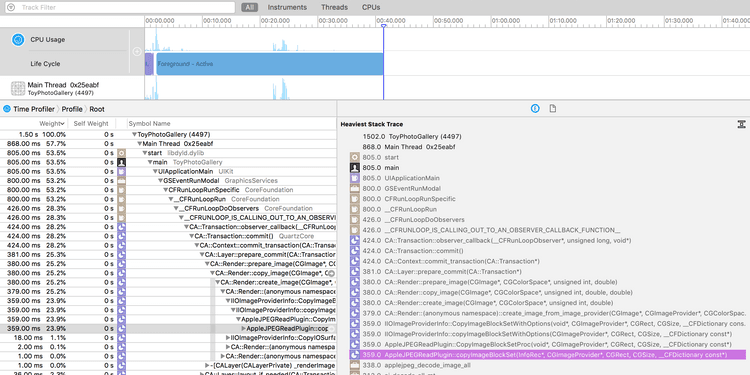

Eliminating Collection View Tearing with Xcode's Time Profiler Instrument

Using the Time Profiler to guide a refactor of image fetching for the collection view cell models, and moving Parse's return call off the main thread, enabled smooth scrolling.

Prototyping with Tensegrity

Tensegrity, or 'tensional integrity', describes structures in which compressed components are balanced by tensioned components in a stable, self-equilibrated state. Bones and muscles in the skeletal system can be thought of as demonstrating a similar kind of balance.

Iterating the Design of a Volumetric Display

Eric Mika and I started a project to make a cylindrical volumetric display from off-the-shelf hardware, such as a drill and a long steel rod from Home Depot. Successive iterations focused first on transferring LED data through a slip ring, then on minimizing harmonic vibration in the rig by changing the size and shape of the display, and most recently on increasing the density of the lights and networking the drivers together.

Generating Interactive Particle Systems in OpenCV and SpriteKit

This project is a proof of concept for capturing infrared video from a homebrew camera, sending the frames through OpenCV to generate keypoints and contours, and then forwarding that data into SpriteKit as the source vectors for particle systems and other visual highlights. The video analysis and particle systems run in real time on the device.
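The project's OpenCV code isn't shown, so here is a dependency-free C++ stand-in for one plausible step: threshold an 8-bit grayscale IR frame and take the centroid of the bright pixels from the image moments m00, m10, and m01 (the same quantities `cv::moments` reports). The function name and the idea of using the centroid as a particle emitter position are assumptions for illustration, not the project's pipeline.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <optional>
#include <vector>

// Stand-in for an OpenCV stage: threshold an 8-bit grayscale frame and return
// the centroid (m10/m00, m01/m00) of pixels brighter than the threshold.
struct Point2 {
    double x, y;
};

std::optional<Point2> brightCentroid(const std::vector<std::uint8_t>& gray,
                                     int width, int height,
                                     std::uint8_t threshold) {
    assert(gray.size() == static_cast<std::size_t>(width) * height);
    double m00 = 0.0, m10 = 0.0, m01 = 0.0;
    for (int y = 0; y < height; ++y) {
        for (int x = 0; x < width; ++x) {
            if (gray[static_cast<std::size_t>(y) * width + x] > threshold) {
                m00 += 1.0;  // area of the bright region
                m10 += x;    // first moment in x
                m01 += y;    // first moment in y
            }
        }
    }
    if (m00 == 0.0) return std::nullopt;  // nothing bright enough this frame
    return Point2{m10 / m00, m01 / m00};
}
```

Image rows count downward from the top, while a SpriteKit scene's y axis points up, so flip y (height − 1 − y) and scale to the scene size before positioning an emitter.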

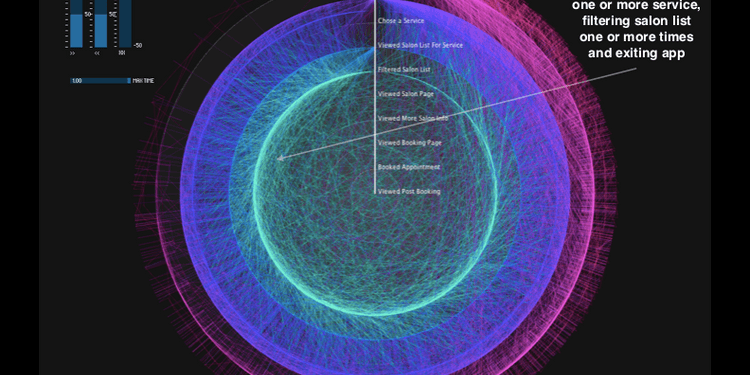

Experimenting with Data Explorers

Interactive data explorers can help refine the questions that guide data collection and suggest new areas of inquiry. Data explorers often filter data sets to reveal patterns in expected behavior along a chosen axis. For example, user experience patterns can be uncovered by adding behavior tracking to mobile applications. Data explorers can also incorporate predictions modeled from proprietary data sources. Whatever the source of the data, carefully defining the graph's dimensions and filtering mechanisms can help reveal interesting patterns hidden inside a complex tracking system.

Detecting Gestures from the Motion of the Body in Relation to Itself

Measuring the motion of a skeleton's joints in relation to each other generates signals that might be recognized as gestures.
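One way such a signal could be built, sketched in C++ under stated assumptions: compute the angle at a joint from three tracked joint positions (for example shoulder, elbow, and wrist), then flag frames where that angle crosses a threshold. The names and the crossing rule are hypothetical, a simple stand-in for real gesture recognition rather than the approach described in the post.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

struct Vec3 {
    double x, y, z;
};

// Angle in radians at joint b between segments b->a and b->c,
// e.g. shoulder-elbow-wrist gives the elbow angle.
double jointAngle(const Vec3& a, const Vec3& b, const Vec3& c) {
    const Vec3 u{a.x - b.x, a.y - b.y, a.z - b.z};
    const Vec3 v{c.x - b.x, c.y - b.y, c.z - b.z};
    const double dot = u.x * v.x + u.y * v.y + u.z * v.z;
    const double lenU = std::sqrt(u.x * u.x + u.y * u.y + u.z * u.z);
    const double lenV = std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
    assert(lenU > 0.0 && lenV > 0.0);
    // Clamp so rounding slightly outside [-1, 1] cannot make acos return NaN.
    return std::acos(std::clamp(dot / (lenU * lenV), -1.0, 1.0));
}

// Hypothetical gesture rule: frame indices where the signal drops below the
// threshold after being at or above it (e.g. an arm starting to bend).
std::vector<std::size_t> downwardCrossings(const std::vector<double>& signal,
                                           double threshold) {
    std::vector<std::size_t> events;
    for (std::size_t i = 1; i < signal.size(); ++i) {
        if (signal[i - 1] >= threshold && signal[i] < threshold) {
            events.push_back(i);
        }
    }
    return events;
}
```

Because the angle depends only on the joints' positions relative to each other, it stays the same when the whole body moves or turns relative to the camera, which is what makes it a candidate gesture signal.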

Delivering Interactive Graphics Wirelessly to an LED Matrix

Driving interactive graphics from the framebuffer of a mobile device to a matrix of LEDs has involved optimizing steps in the pipeline on a case-by-case basis rather than trying to get it all to work at the same time.